"We're considering building our own AI solution internally." We hear this increasingly often from companies that want control over security and AI usage. Understandably so: when something is this critical to the business, it is natural to want full control. But let us take a closer look at what this really entails.

The temptation to build in-house

It is easy to understand the appeal:

- Full control over the technology

- Customized to your needs

- No dependency on external vendors

- Security handled internally

On paper, it sounds perfect. In reality? It is a completely different story.

Reality: AI development at lightning speed

The AI landscape is not just changing rapidly - it is accelerating. Think about what happened in 2024 alone:

- January 2024: GPT-4 is state-of-the-art

- March 2024: Claude 3 surpasses GPT-4 in several areas

- June 2024: New multimodal models launch

- September 2024: Local models become powerful enough for enterprise use

- December 2024: Entirely new AI paradigms are introduced

How can an internal development team keep pace with this?

"CompanyGPT" - When ambitions meet reality

Many companies start with good intentions: "We'll create our own ChatGPT on Azure with OpenAI!" But here is what often happens in practice:

Months 1-3: Enthusiasm

- Team is assembled

- Azure OpenAI is set up

- Simple chat interface developed

- "Look, we have our own ChatGPT!"

Months 4-6: Reality sets in

- Users miss features from "real" ChatGPT

- No file handling

- No image analysis

- No advanced formatting

- No plugins or integrations

Month 7+: Shadow IT flourishes

- Employees use ChatGPT Plus in secret

- "CompanyGPT" sits unused

- IT department spends time blocking "unauthorized" AI use

- Innovation is stifled

The hidden costs of in-house development

1. The talent war

AI experts are among the most sought-after talents in the job market:

- Salary levels: Top AI developers can command million-dollar salaries

- Recruitment: Can take 6-12 months to find the right expertise

- Retention: Constant risk of talent being headhunted

2. Development resources

Even with Azure OpenAI, you must:

- Develop user interfaces that match expectations

- Build authentication and access control

- Implement document handling

- Create search functionality

- Handle versioning and updates
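To make the list above concrete, here is a minimal sketch of the plumbing that has to surround even a single model call. It is an illustration under stated assumptions, not a reference design: every name in it (`User`, `Document`, `visible_documents`, `search`, `answer`, `call_model`) is hypothetical, the search is a naive keyword match, and the model call is passed in as a plain function rather than tied to any real SDK.

```python
from dataclasses import dataclass, field
from typing import Callable

# All names below are hypothetical and exist only for illustration.


@dataclass
class User:
    name: str
    groups: set[str] = field(default_factory=set)


@dataclass
class Document:
    title: str
    text: str
    allowed_groups: set[str]
    version: int = 1  # versioning: which revision of the document this is


def visible_documents(user: User, docs: list[Document]) -> list[Document]:
    """Access control: keep only documents that the user's groups may read."""
    return [d for d in docs if user.groups & d.allowed_groups]


def search(query: str, docs: list[Document]) -> list[Document]:
    """Naive keyword search; a production system would need real indexing."""
    terms = query.lower().split()
    return [d for d in docs if any(t in d.text.lower() for t in terms)]


def answer(
    user: User,
    question: str,
    docs: list[Document],
    call_model: Callable[[str], str],
) -> str:
    """Filter by permissions, search, then build the prompt for one model call."""
    context = search(question, visible_documents(user, docs))
    prompt = "\n\n".join(f"[{d.title} v{d.version}]\n{d.text}" for d in context)
    return call_model(f"{prompt}\n\nQuestion: {question}")
```

Even this toy version leaves out the user interface, file parsing, audit logging, and real authentication, which is where most of the effort tends to go.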

3. Continuous development

- New features: OpenAI ships new features at a rapid pace, sometimes weekly

- User expectations: "Why doesn't our version have this?"

- Technical debt: Always one step (or ten) behind

4. Support and maintenance

- User training: Continuous need

- Bug fixes: A steady stream of issues to triage and resolve

- Documentation: Must be kept updated

Why "light versions" fail

Users' perspective:

Public ChatGPT Plus:

- Immediate access to the latest models

- Image analysis and generation

- Advanced code assistance

- File handling

- Plugins and integrations

- Continuous improvements

CompanyGPT (typical):

- Basic chat

- Limited file support

- No image handling

- Slow updates

- Frustrating limitations

Is it any wonder users choose the former?

What users actually need

While IT builds its "light version," users want:

- Full functionality like commercial solutions

- Integration with company data

- Security without compromising functionality

- Quick access to new features

- Professional user experience

The Platform Approach: Best of both worlds

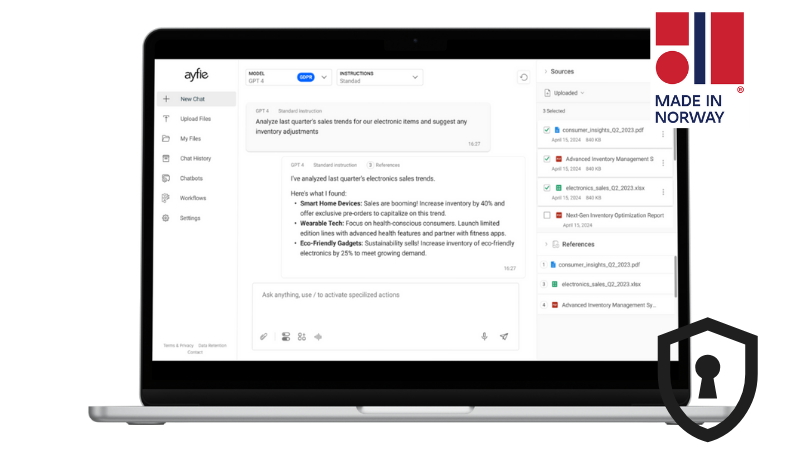

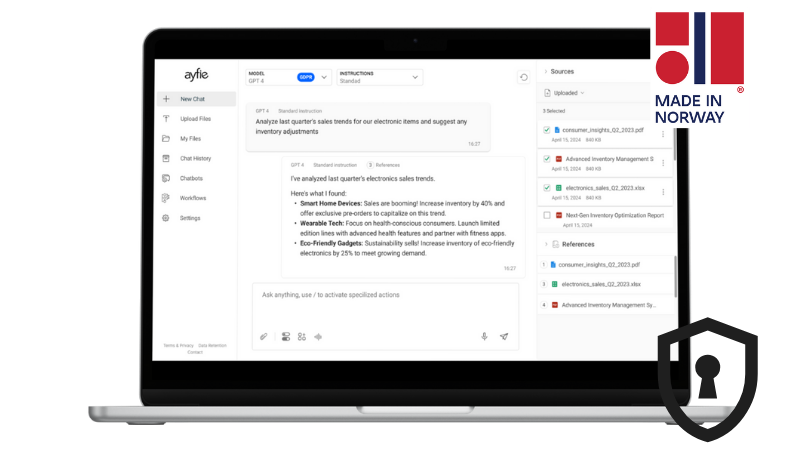

Instead of building a half-hearted copy, consider using an established AI platform such as Ayfie Personal Assistant.

You will immediately get:

- Support for all leading LLMs (not just OpenAI)

- Professional user interface

- 550+ file formats handled

- Enterprise-grade security

- Continuous updates that match the market

What you can focus on:

- Integration with internal systems

- Custom workflows

- Industry-specific solutions

- Value creation for users

The uncomfortable truth about CompanyGPT projects

Based on experience, we often see this pattern:

- Big fanfare: "We're building our own AI!"

- MVP launches: Basic chat functionality

- User disappointment: "Is this all?"

- Shadow IT: Employees use external services

- Project zombie: Lives on paper, dead in practice

Real examples

Success story: Technology company

- Chose: Ayfie platform with custom integrations

- Time to value: 2 months

- User adoption: 85% active users

- ROI: Positive after 6 months

Learning experience: Consulting firm

- Chose: Build own "CompanyGPT" on Azure

- Status after 12 months:

  - 15% user adoption

  - 70% still use ChatGPT Plus privately

  - Constant "feature gap" with public solutions

- Estimated loss: Millions in development costs + lost productivity

When in-house development might make sense

There are exceptions where building your own might be right:

- You have highly specific needs that no platform covers

- Regulatory requirements forbid all external solutions

- You have unlimited development resources

- AI is your core business

For the vast majority, none of these apply.

The way forward: Smart partnership

The smartest approach:

- Choose a professional AI platform that keeps pace with the market

- Focus on integrations that provide unique value

- Let the platform handle core functionality and user experience

- Spend your resources on business-specific customizations

Conclusion: Do not compete with tech giants

Trying to build your own "CompanyGPT" is like trying to create your own version of Microsoft Office. Theoretically possible? Yes.

Practically sensible? No.

OpenAI, Anthropic, Google, and others spend billions on development. They have thousands of engineers. They innovate daily. Your internal "light version" will always be just that - a disappointing light version.

Instead, focus your efforts on:

- How AI can integrate with YOUR unique data

- How you can create workflows that fit YOUR business

- How you can give users the best of both worlds

The question is not: Can we build our own ChatGPT? The question is: Why would we want to?

Let those who are best at AI platforms build AI platforms. You focus on creating value for your business.

Sindre Johansen

:

Jul 15, 2025 9:00:00 AM
