Your AI, Your Rules: How Ayfie Puts You in Control of Data Security

In today's AI-powered workplace, the question is not whether to use AI—it is how to use it safely. While tools like ChatGPT have captured the world's...

4 min read

Nadia Aurdal, Aug 12, 2025 8:02:23 AM

Last week, I was sitting in a café when I overheard something every business leader needs to be aware of. The people at the table next to me were enthusiastically describing their relationship with ChatGPT: "It's amazing, I am using it as my personal therapist. I tell it everything about my marriage problems and it gives me better advice than my actual therapist."

Another one chimed in: "I use it for everything! I even uploaded my medical test results to get a second opinion."

Then he added: "I use it for everything at work too. Last week I uploaded our competitive analysis to get further insights on market positioning."

This perfectly illustrates why we need to educate employees about AI security, not just for company protection, but for their personal privacy too. When people do not understand how AI models work and do not put the necessary security measures in place, they unknowingly put both their personal lives and company data at risk.

Here is what many people do not realize: when they share information with AI tools, that data may be used to train models, stored indefinitely, or even appear in search results through shared links (OpenAI has since removed this feature).

As Stanford researcher Jennifer King explains, "AI systems are so data-hungry and intransparent that we have even less control over what information about us is collected, what it is used for, and how we might correct or remove such personal information."

The same employees sharing their deepest personal secrets are naturally sharing company secrets too. Not because they are careless, but because they trust these tools without understanding the implications or the safeguards they need to put in place.

Research from Cyberhaven shows that 11% of the data employees paste into ChatGPT is confidential, and this number is probably higher today. But here is the deeper insight: employees who regularly share personal information have already developed habits of comfort that carry over to professional data.

Consider this progression we see repeatedly:

The most successful organizations recognize that employee education about AI security benefits everyone:

When employees understand AI risks, they protect their own:

That same awareness naturally extends to protecting:

A notable incident occurred in 2023 when Samsung engineers pasted confidential source code into ChatGPT for debugging help. They did not realize that OpenAI may retain those inputs for model training. Once submitted, the information entered a system Samsung no longer controlled, leading the company to ban internal use of generative AI tools.

This is where purpose-built tools such as Ayfie Personal Assistant change the game. When employees have access to AI that respects privacy by design:

A Nordic technology company implemented a brilliant approach:

According to a McKinsey study, 71% of respondents said their organizations regularly use AI in at least one business function. Educating your employees cannot wait. Here is what works:

Start conversations about personal privacy. When employees realize their therapy sessions or health data could be training AI models, they naturally become more cautious with company data.

Use simple analogies: "Using AI is like having a conversation in a crowded café. You never know who is listening or taking notes."

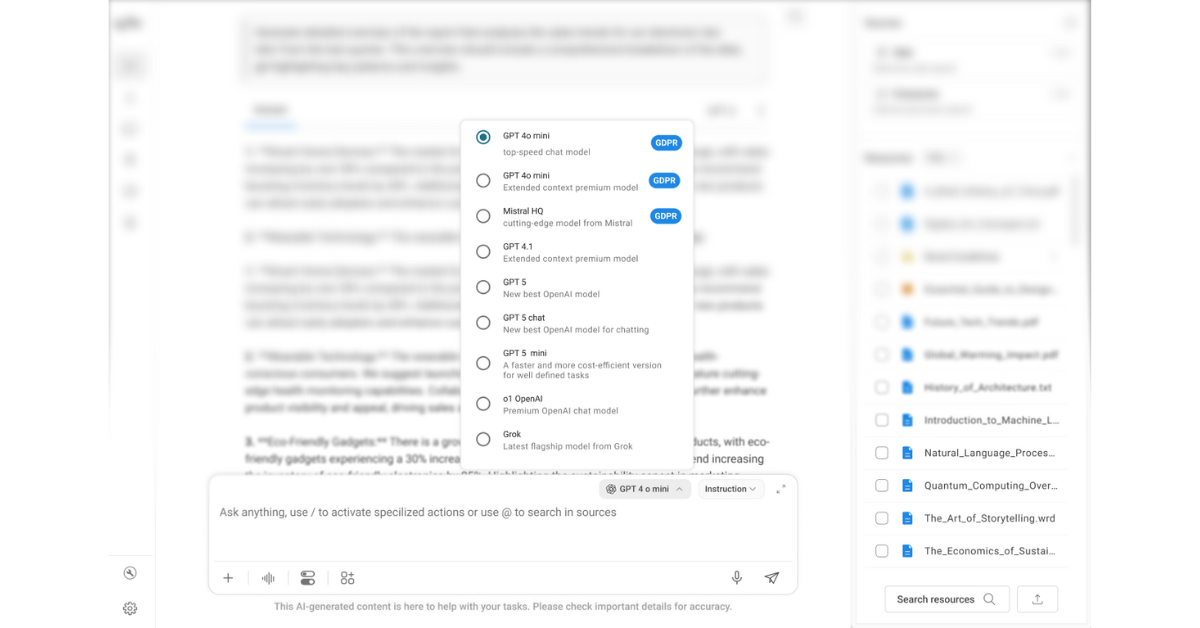

Show how tools such as Ayfie Personal Assistant offer:

Create safe spaces for employees to discuss their AI use without judgment. Many are shocked to learn what they have been sharing.

Here is how to start this crucial dialogue:

When employees understand AI privacy and security, something remarkable happens:

Research shows that 84% of SaaS apps are purchased outside IT. Your employees are already using AI. The question is whether they are doing so with awareness of the implications for their personal lives and your business.

By educating employees and providing privacy-respecting alternatives like Ayfie Personal Assistant, you:

That café conversation was not unique. Hopefully, the people I overheard had already put security measures in place, but conversations like theirs happen every day in organizations worldwide. Smart, capable people are unknowingly sharing their most personal information and company secrets with AI systems they do not fully understand.

The solution is not to ban AI or create fear. It is to educate and empower. When employees understand how AI works and have access to tools that respect their privacy, they naturally make better choices for themselves and your organization.

Your next all-hands meeting should include this conversation. Because right now, your employees are sharing their personal struggles and your competitive advantages with the same AI systems. They deserve to know the implications and to have better options.

The future belongs to organizations that protect both personal privacy and professional data. That future starts with awareness and the right tools.

Ready to have this conversation? Your employees and your business will thank you.
